Azure ML (misleading) charts

tl;dr: don’t trust Azure ML charts.

In a recent project, we relied heavily on Azure ML workspaces. All of our models had been deployed as Azure-managed online endpoints. Letting Azure manage the endpoints was the wise thing to do, given that we didn’t have enough people to maintain a sane Kubernetes ecosystem ourselves.

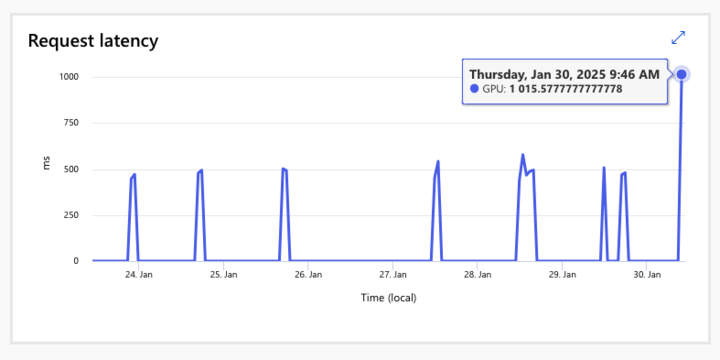

Nonetheless, we found the endpoint latency a bit too high. The first question we asked was “is it really using the GPU?” The Azure charts confirmed it was.

There was something weird with that chart, though. The best way to troubleshoot the high latency would have been to SSH into the endpoint, but that wasn’t possible. The next best thing was to mirror the endpoint on a VM we could control and run some profiling there.

Much to my surprise, when we mirrored the endpoint on this new VM with the exact same code, nvtop showed zero GPU activity. After some investigation, I found out that a certain node in our ONNX model made the inference engine fall back to the CPU execution provider.
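
One way to spot this kind of fallback is to profile a single inference and count which execution provider each node actually ran on. The snippet below is a minimal sketch, assuming the inference engine is ONNX Runtime: `profile_model` and `providers_per_node` are hypothetical helper names, the model path and input feeds are placeholders, and the `cat` / `args.provider` field names are an assumption about the profile JSON that ONNX Runtime writes, so check them against the file your version produces.

```python
import json
from collections import Counter


def providers_per_node(profile_events):
    """Count profiled node executions per execution provider.

    Assumes each node event looks like
    {"cat": "Node", "args": {"provider": "...", ...}}.
    """
    counts = Counter()
    for event in profile_events:
        args = event.get("args") or {}
        if event.get("cat") == "Node" and "provider" in args:
            counts[args["provider"]] += 1
    return counts


def profile_model(model_path, feeds):
    """Run one inference with profiling on and summarize provider usage.

    `model_path` and `feeds` are placeholders for your own model and inputs.
    """
    import onnxruntime as ort  # imported lazily; only needed for a real run

    options = ort.SessionOptions()
    options.enable_profiling = True  # writes a JSON trace to disk

    session = ort.InferenceSession(
        model_path,
        sess_options=options,
        # Preference order: try CUDA first, fall back to CPU.
        providers=["CUDAExecutionProvider", "CPUExecutionProvider"],
    )
    # Providers that were actually registered for this session.
    print("Enabled providers:", session.get_providers())

    session.run(None, feeds)
    profile_path = session.end_profiling()  # path of the JSON trace

    with open(profile_path) as f:
        return providers_per_node(json.load(f))
```

If `CPUExecutionProvider` dominates the counts even though `CUDAExecutionProvider` is listed first, most of the graph is running on the CPU. A handful of small ops on the CPU can be normal, so it is the proportion that matters.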